Amazon is a giant in the e-commerce industry, and it is always looking for talented data scientists to join its ranks. Data scientists have become increasingly important to Amazon’s success, and with the company’s ever-growing list of products and services, this demand is likely to keep increasing.

If you want to become a data scientist at Amazon, you must be prepared for the interview process. Amazon is known for its rigorous interviews, and you’ll need to be ready for a wide variety of questions.

From technical questions about SQL and Python programming to questions about big data, machine learning, and statistics, Amazon data scientist interviews can be grueling. That’s why we’ve compiled this list of Amazon data scientist interview questions and answers. This list will provide you with a great starting point to prepare for a successful Amazon data scientist interview.

We’ll start with an overview of the interview process. Then, we’ll move on to the questions themselves and provide detailed answers that you can use to practice. Finally, we’ll share some tips to help you prepare for the interview.

With the help of this blog, you’ll have all the resources you need to get ready for your Amazon data scientist interview. By taking the time to prepare and practice, you’ll increase your chances of success and show Amazon that you are the right candidate for the job.

Overview of Amazon Data Scientist Interview Process

The Amazon Data Scientist interview process is extensive and thorough. It typically starts with a phone screen, either a recorded or a live call with a recruiter. During this call, the recruiter will ask questions to assess the candidate’s experience, skills, and qualifications. If the phone screen goes well, the candidate will be invited to complete an online assessment of their technical and problem-solving abilities.

The next step in the Amazon Data Scientist interview process is a technical interview. During the technical interview, the candidate will be asked questions about data structures, algorithms, and machine learning. This interview will assess the candidate’s technical understanding and ability to apply that understanding to solving problems.

After that comes a behavioral interview. During this interview, the interviewer will ask questions to assess the candidate’s work experience, communication skills, and team collaboration. The interviewer will also assess the candidate’s problem-solving skills and ability to think on their feet.

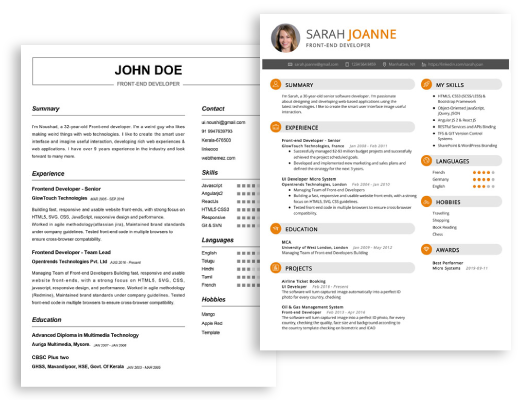


Be sure to check out our resume examples, resume templates, resume formats, cover letter examples, job description, and career advice pages for more helpful tips and advice.

The last remote stage of the Amazon Data Scientist interview process is a case interview. During this interview, the candidate will be presented with a complex data science problem and asked to develop an algorithmic solution. The interviewer will assess the candidate’s ability to think critically, solve problems, and analyze data. Candidates who succeed at this stage are invited to a final in-person onsite interview, which gives them an opportunity to demonstrate their skills and knowledge in a more comprehensive and detailed way.

Overall, the Amazon Data Scientist interview process is intense and comprehensive, assessing the candidate’s technical abilities, problem-solving skills, and communication capabilities. The entire process can take up to several months, depending on the complexity of the position and the candidate’s availability.

Top 18 Amazon Data Scientist Interview Questions and Answers

1. What is the most challenging data science project you have worked on?

The most challenging data science project I’ve worked on was a project for a large retail company. The goal of the project was to develop a predictive pricing model for their products. The challenge was twofold. First, there was a large amount of data that needed to be processed and cleaned in order to create a meaningful model. Second, the data had many outliers and varying distributions that could not be easily corrected. To overcome this challenge, I used a combination of statistical techniques, such as outlier detection and data transformations, to create a more accurate model. In the end, I built a model that predicted product pricing accurately and was well-received by the client.

2. What is your experience working with machine learning algorithms?

I have extensive experience working with a variety of machine learning algorithms, including linear and logistic regression, decision trees, random forests, and neural networks. I have worked on projects that required the use of different algorithms to obtain the best results, and I am familiar with the process of tuning hyperparameters to maximize model performance. I have also implemented different methods for dealing with imbalanced data.

3. Describe a project you have worked on that incorporated data visualization.

I recently worked on a project for a retail company in which we were tasked with creating a dashboard to visualize their sales data. The dashboard needed to include interactive charts that would give users the ability to quickly view trends in sales and compare sales across different time periods. To achieve this, we used a combination of libraries, such as D3.js and Plotly, to create interactive charts that could be used to explore the data. The dashboard was well-received and has been used by the company to better understand their sales data.

4. How do you handle missing data in your projects?

When dealing with missing data, I typically use one of two approaches. If only a small fraction of the values is missing, I use imputation techniques, such as filling in the median, to replace the missing values. If too much data is missing to impute reliably, I try to identify why it is missing and either obtain the missing data or remove the affected data points from the dataset. Either way, the goal is to make sure that the missing data does not bias the analysis or the decisions based on it.
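Here is a minimal sketch of that two-way decision in plain Python, with no third-party libraries. The function name `handle_missing` and the 5% cutoff (`max_missing_fraction`) are illustrative assumptions, not a standard rule. `None` marks a missing value.

```python
from statistics import median


def handle_missing(values, max_missing_fraction=0.05):
    """Median-impute a numeric column when little data is missing, else drop.

    `values` is a list where None marks a missing entry. The default 5%
    threshold is a hypothetical cutoff chosen for illustration.
    Returns (cleaned_values, strategy).
    """
    if not values:
        return [], "empty"
    observed = [v for v in values if v is not None]
    missing_fraction = (len(values) - len(observed)) / len(values)
    if observed and missing_fraction <= max_missing_fraction:
        # Few gaps: fill each one with the median of the observed values.
        fill = median(observed)
        return [fill if v is None else v for v in values], "imputed"
    # Too many gaps to impute safely: drop them (after investigating why).
    return observed, "dropped"


print(handle_missing([1.0, 2.0, None, 4.0, 5.0], max_missing_fraction=0.25))
```

The median is used rather than the mean so that a few extreme values do not distort the filled-in entries.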

5. How do you go about testing a machine learning model?

When testing a machine learning model, I typically use a combination of evaluation metrics and visualization techniques to ensure the model is performing as expected. I begin by splitting the data into training and test sets and then fitting the model on the training data. Once the model has been trained, I evaluate the model’s performance on the test data using metrics such as precision, recall, and accuracy. Additionally, I use visualization techniques such as confusion matrices and ROC curves to get a better understanding of the model’s performance. This helps me to identify any areas in which the model can be improved.
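The split-then-score workflow described above can be sketched in plain Python. The predictions would come from whatever classifier you trained. The helper names are hypothetical, and in practice you would more likely use a library such as scikit-learn.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of `rows` and split it into training and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    """Precision, recall, and accuracy computed from the confusion matrix."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "accuracy": (tp + tn) / len(y_true),
    }


# Test-set labels vs. predictions from some already-trained model.
print(classification_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
```

On imbalanced data, accuracy alone can look deceptively good. That is why precision and recall are reported alongside it.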

6. What methods do you use to prevent overfitting in your models?

There are a few methods that I use to prevent overfitting in my models. The first is regularization, such as L1 and L2 regularization, which penalizes model complexity and discourages the model from learning patterns that are too specific to the training data. The second is cross-validation, such as k-fold cross-validation, which evaluates the model on several held-out folds of the data. This exposes overfitting that a single split might miss and helps me choose settings such as the regularization strength. Finally, for neural networks I use techniques such as early stopping and dropout, which limit how closely the model can fit noise in the training data.
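Two of these ideas can be sketched in a few lines of plain Python. `ridge_slope` shows how an L2 penalty (the hypothetical `lam` parameter) shrinks a one-feature, no-intercept regression coefficient toward zero. `early_stopping_epoch` applies the usual patience rule to a list of per-epoch validation losses. Both are illustrative simplifications, not any specific library's implementation.

```python
def ridge_slope(xs, ys, lam=0.0):
    """Closed-form slope for y ~ w * x (no intercept) with an L2 penalty.

    Minimizes sum((y - w*x)**2) + lam * w**2, giving
    w = sum(x*y) / (sum(x*x) + lam). Larger lam shrinks w toward 0.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch whose weights to keep.

    Training stops once validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs.
    """
    best_epoch, best_loss = 0, float("inf")
    stale_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, stale_epochs = epoch, loss, 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                break  # stop training; roll back to best_epoch
    return best_epoch


print(ridge_slope([1, 2, 3], [2, 4, 6], lam=0.0))   # 2.0
print(ridge_slope([1, 2, 3], [2, 4, 6], lam=14.0))  # 1.0
print(early_stopping_epoch([1.0, 0.8, 0.6, 0.65, 0.7, 0.72, 0.5]))  # 2
```

With `patience=3`, training stops before the late drop to 0.5 is ever seen. Patience is a trade-off between saving compute and giving the model room to recover from a noisy epoch.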

7. How do you select features for your machine learning models?

When selecting features for my machine learning models, I typically use feature selection methods, such as recursive feature elimination and random forest feature importances. These methods help me identify the most informative features in the dataset and use them as inputs to the model. I also use dimensionality reduction algorithms, such as principal component analysis, which replace the original features with a smaller set of components that retain most of the important information. Using these methods, I can focus the model on the most useful inputs and build more accurate models.
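Recursive feature elimination and random forest importances both need a fitted model. As a simpler stand-in, this sketch shows a filter method: rank features by their absolute Pearson correlation with the target and keep the top `k`. This technique is swapped in for illustration and is not RFE. It only captures linear, one-feature-at-a-time relationships, and the feature names are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def select_top_features(features, target, k):
    """Keep the k features most correlated (in absolute value) with target.

    features: {name: list of values}, each aligned with `target`.
    """
    scores = {name: abs(pearson(vals, target)) for name, vals in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


target = [1, 2, 3, 4, 5]
features = {
    "a": [2, 4, 6, 8, 10],  # perfectly positively correlated
    "b": [5, 4, 3, 2, 1],   # perfectly negatively correlated
    "c": [1, 1, 1, 1, 1],   # constant, carries no information
    "d": [1, 3, 2, 5, 4],   # partially correlated
}
print(select_top_features(features, target, k=3))
```

The absolute value is used because a strong negative correlation (feature "b") is just as informative as a positive one.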

8. How do you handle outliers in your data?

When dealing with outliers in my data, I typically use a combination of methods. First, I identify outliers using detection algorithms such as Isolation Forest, or clustering-based approaches such as k-means, where points far from their cluster’s centroid are flagged. Once the outliers have been identified, I decide how to handle them based on whether they are data errors or genuine extreme values. Options include removing them from the dataset, replacing them with the mean or median, or applying data transformations, such as a log transform, to reduce their influence.
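Isolation Forest requires a library, so this plain-Python sketch substitutes the simpler interquartile range (IQR) rule for a single numeric column. It flags values more than `k` times the IQR below the first quartile or above the third, and shows one handling option: capping them at those bounds. The 1.5 multiplier is a common convention, not a requirement.

```python
from statistics import quantiles


def iqr_bounds(values, k=1.5):
    """Lower and upper fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def find_outliers(values, k=1.5):
    """Values that fall outside the IQR fences."""
    low, high = iqr_bounds(values, k)
    return [v for v in values if v < low or v > high]


def cap_outliers(values, k=1.5):
    """Clip every value into the IQR fences instead of dropping it."""
    low, high = iqr_bounds(values, k)
    return [min(max(v, low), high) for v in values]


data = [1, 2, 3, 4, 5, 6, 7, 8, 100]
print(find_outliers(data))  # [100]
print(cap_outliers(data))
```

Capping keeps the row and its other columns, which is often preferable to deletion when the extreme value is plausible but overly influential.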

9. How do you go about validating your machine learning models?

When validating my machine learning models, I typically use a train/test split, cross-validation, and out-of-sample testing. Splitting the data into training and test sets lets me assess the model’s performance on data that was not used to train it. Cross-validation techniques, such as k-fold cross-validation, go further: they train and evaluate the model on several different folds of the data and average the results. Finally, I use out-of-sample testing, for example on a later time period or a separate holdout set, to confirm that the model generalizes to data it has never seen.
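Here is a minimal sketch of k-fold cross-validation in plain Python. `score_fn` is a hypothetical callback that trains on the training indices and returns a score on the validation indices. Real data should usually be shuffled before folding, or, for time series, split by time.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds get one additional sample each.
        size = base + (1 if fold < extra else 0)
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def cross_validate(score_fn, n_samples, k=5):
    """Average the validation score over all k folds."""
    scores = [score_fn(train_idx, val_idx)
              for train_idx, val_idx in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


for train_idx, val_idx in k_fold_indices(10, k=3):
    print(len(train_idx), val_idx)
```

Averaging over folds gives a less noisy estimate than a single split, at the cost of training the model k times.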

10. What techniques do you use to optimize your models?

To optimize my models, I typically use a combination of techniques. These include tuning hyperparameters, such as learning rate, batch size, and number of layers, with search strategies such as grid search and random search to find the best-performing combination. I also use early stopping and dropout to prevent overfitting, which helps the model generalize better to new data.
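This plain-Python sketch contrasts grid search with random search. `toy_score` is a hypothetical stand-in for a cross-validated score. In a real project it would train and evaluate the model for each hyperparameter combination.

```python
import random
from itertools import product


def grid_search(score_fn, param_grid):
    """Evaluate every combination in param_grid; higher score is better."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(score_fn, param_grid, n_iter=10, seed=0):
    """Evaluate n_iter randomly sampled combinations instead of all of them."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.choice(values) for name, values in param_grid.items()}
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(learning_rate, batch_size):
    # Hypothetical stand-in for a cross-validated score that peaks at
    # learning_rate=0.01, batch_size=64.
    return -(learning_rate - 0.01) ** 2 - ((batch_size - 64) / 100) ** 2


grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [32, 64, 128]}
print(grid_search(toy_score, grid))
print(random_search(toy_score, grid, n_iter=5))
```

Grid search cost grows multiplicatively with every hyperparameter added. Random search caps the budget at `n_iter` evaluations, which is why it is often preferred for larger search spaces.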

11. What is the purpose of exploratory data analysis?

Exploratory data analysis (EDA) is the process of analyzing a dataset by summarizing its main characteristics and uncovering any hidden patterns or insights that may exist. It focuses on exploring and understanding the data before making strong assumptions about its structure or the relationships between variables. EDA is usually the first step in the data analysis process, allowing data scientists to gain a better understanding of the dataset before applying more advanced techniques. It involves techniques such as plotting data, computing summary statistics, and identifying correlations between variables. By exploring the data in this way, data scientists can gain valuable insights that inform the decisions they make and the models they build.
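The summary-statistics part of EDA can be sketched with Python's standard `statistics` module. The column names and values below are hypothetical, and `None` marks a missing value.

```python
from statistics import mean, median, stdev


def summarize_column(values):
    """Summary statistics for one numeric column; None marks a missing value."""
    observed = [v for v in values if v is not None]
    if not observed:
        return {"count": 0, "missing": len(values)}
    return {
        "count": len(observed),
        "missing": len(values) - len(observed),
        "mean": mean(observed),
        "median": median(observed),
        "stdev": stdev(observed) if len(observed) > 1 else 0.0,
        "min": min(observed),
        "max": max(observed),
    }


def summarize_dataset(columns):
    """Apply summarize_column to every column of a {name: values} dataset."""
    return {name: summarize_column(values) for name, values in columns.items()}


# Hypothetical sales data for illustration.
data = {"units": [3, 5, None, 4, 40], "price": [9.5, 9.0, 10.0, None, 9.5]}
for name, stats in summarize_dataset(data).items():
    print(name, stats)
```

The gap between the mean (13) and the median (4.5) of `units` is the kind of signal EDA surfaces: it points to the single extreme value of 40, which is worth investigating before modeling.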

12. What do you consider to be the most important skills for a data scientist?

The most important skills for a data scientist include problem-solving abilities, statistical and machine learning knowledge, data wrangling, communication and collaboration skills, knowledge of coding languages, and experience with data visualization. A data scientist should also be able to interpret and analyze complex data sets, develop meaningful insights, and build predictive models. They must also be comfortable with working with large amounts of data and be able to interpret the results from their analysis.

13. How do you approach solving a complex data problem?

When approaching a complex data problem, I start by understanding the problem and the data I have available to solve it. I then create a data pipeline to collect, store, and process the data. I use exploratory data analysis to identify patterns, trends, and relationships in the data. I use visualizations to identify insights in the data. From there, I use machine learning algorithms to build predictive models for the problem. Finally, I evaluate the model’s performance and make improvements to the model if necessary.

14. How do you handle missing data in a project?

When handling missing data in a project, I first identify the missing values in the dataset and try to understand why they are missing, because that determines which imputation technique is appropriate. If the data is missing completely at random, filling in the mean or median is often acceptable. If the missingness depends on other observed variables (missing at random), I might use a predictive model, k-nearest neighbors, or a clustering algorithm to impute values from those variables. If the data is missing not at random, no imputation method fully removes the bias, so I also add a flag marking which values were missing and investigate the cause.
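Here is a minimal plain-Python sketch of k-nearest-neighbors imputation combined with a missingness flag. It assumes the distance features are fully observed and at least one row has the target value. The column names are hypothetical. In practice, scikit-learn's `KNNImputer` covers the imputation step.

```python
from math import dist


def knn_impute(rows, target, features, k=3):
    """Fill missing `target` values from the k nearest rows that have one.

    rows: list of dicts; None marks a missing target value. Distance is
    Euclidean over `features`, which are assumed fully observed. A boolean
    `<target>_missing` column is added so the model can still see which
    values were imputed.
    """
    donors = [r for r in rows if r[target] is not None]
    result = []
    for row in rows:
        new_row = dict(row)
        new_row[f"{target}_missing"] = row[target] is None
        if row[target] is None:
            point = [row[f] for f in features]
            nearest = sorted(
                donors, key=lambda d: dist(point, [d[f] for f in features])
            )[:k]
            new_row[target] = sum(d[target] for d in nearest) / len(nearest)
        result.append(new_row)
    return result


rows = [
    {"x": 1, "y": 10},
    {"x": 2, "y": 20},
    {"x": 3, "y": None},
    {"x": 10, "y": 100},
]
for row in knn_impute(rows, target="y", features=["x"], k=2):
    print(row)
```

Keeping the missingness flag matters most in the missing-not-at-random case, because the fact that a value was missing can itself carry signal.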

15. What do you think are the key components of a successful data science project?

The key components of a successful data science project include a clear understanding of the problem and the data, the ability to identify patterns, trends, and relationships in the data, the ability to build predictive models, and an understanding of how those models perform. A successful project also requires effective communication and collaboration between stakeholders, and a thorough evaluation against clearly defined success metrics.

16. How do you select the right machine learning algorithm for a particular dataset?

When selecting the right machine learning algorithm for a particular dataset, I start by understanding the type of problem I am trying to solve, such as classification, regression, or clustering. I then narrow down the candidate algorithms by considering factors such as the size of the dataset, the type of data, the complexity of the algorithm, and its computational efficiency. Once I have selected an algorithm, I evaluate its performance to ensure that it is suitable for the problem.

17. How do you ensure the accuracy of your data?

To ensure the accuracy of my data, I start by verifying that the data comes from a reliable source. I then clean and preprocess the data to remove errors such as duplicates, invalid values, and inconsistent formats. Next, I use exploratory data analysis to look for anomalies, such as values outside their expected ranges or unexpected shifts in distributions, and correct any errors that I find. Finally, I spot-check a random sample of records against the source to confirm that the data is accurate.
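The checks described above can be sketched as a small plain-Python validator plus a random spot-check sample. The column names, bounds, and function names are hypothetical examples, not a standard schema.

```python
import random


def data_quality_issues(rows, key, required, ranges):
    """Return human-readable data-quality problems found in `rows`.

    rows: list of dicts. key: column that should be unique. required:
    columns that must not be None. ranges: {column: (low, high)} bounds.
    """
    issues = []
    seen_keys = set()
    for i, row in enumerate(rows):
        if row.get(key) in seen_keys:
            issues.append(f"row {i}: duplicate {key}={row.get(key)!r}")
        seen_keys.add(row.get(key))
        for col in required:
            if row.get(col) is None:
                issues.append(f"row {i}: missing {col}")
        for col, (low, high) in ranges.items():
            value = row.get(col)
            if value is not None and not low <= value <= high:
                issues.append(f"row {i}: {col}={value!r} outside [{low}, {high}]")
    return issues


def spot_check_sample(rows, n=5, seed=0):
    """Random sample of rows to compare by hand against the source system."""
    return random.Random(seed).sample(rows, min(n, len(rows)))


orders = [
    {"order_id": 1, "price": 9.99, "qty": 1},
    {"order_id": 1, "price": -5, "qty": 2},
    {"order_id": 2, "price": None, "qty": 1},
]
for issue in data_quality_issues(
    orders, key="order_id", required=["price"],
    ranges={"price": (0, 10000), "qty": (1, 1000)},
):
    print(issue)
```

Automated checks catch structural problems cheaply. The random sample is still worth comparing by hand, because some errors, such as a wrong but plausible price, only show up against the source.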

18. What techniques do you use to evaluate your machine learning models?

When evaluating my machine learning models, I start by identifying the performance metrics that best suit the problem. Common metrics include accuracy, precision, recall, and F1 score. Additionally, I use cross-validation to estimate how well the model generalizes, and bootstrapping to estimate confidence intervals for its performance metrics. Where appropriate, I also use A/B testing and backtesting to evaluate the model’s performance under realistic conditions. Finally, I use visualization techniques such as confusion matrices and heatmaps to understand where the model makes mistakes.
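Here is a minimal sketch of a percentile bootstrap confidence interval for a metric, written in plain Python. The function names, the 1,000 resamples, and the 95% level are illustrative choices rather than fixed rules.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, metric=accuracy, n_resamples=1000,
                 alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a metric.

    Resamples (label, prediction) pairs with replacement, recomputes the
    metric on each resample, and returns the alpha/2 and 1 - alpha/2
    percentiles of the resulting distribution.
    """
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        scores.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    scores.sort()
    lower = scores[int(alpha / 2 * n_resamples)]
    upper = scores[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Hypothetical test set: 100 labels, 10 of the predictions are wrong.
y_true = [1] * 80 + [0] * 20
y_pred = [0] * 10 + [1] * 70 + [0] * 20
print(accuracy(y_true, y_pred), bootstrap_ci(y_true, y_pred))
```

The interval shows how much a metric could move from test-set noise alone. This helps when deciding whether one model is genuinely better than another.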

Tips on Preparing for an Amazon Data Scientist Interview

- Showcase your technical abilities. It’s important to be able to articulate your technical knowledge and experience. Prepare to discuss the technical skills you possess and how they are relevant to the role.

- Do your research. Take the time to read up on Amazon as a company, familiarize yourself with the products, services, and any other information you are able to find.

- Practice coding. Brush up on your coding skills prior to the interview. Familiarize yourself with the coding languages used for data science.

- Come prepared with questions. Have a few questions prepared for the interviewer. This will demonstrate that you have done your research and show that you are interested in the role.

- Know what skills are needed. Understand what skills are required to be successful in the role and demonstrate how you meet those requirements.

- Demonstrate your problem-solving skills. Prepare answers that showcase your ability to think through problems and provide solutions.

- Understand the interviewer’s expectations. Learn about the interviewer’s expectations for the role and make sure you are prepared to meet or exceed those expectations.

- Know the relevant AWS services. Understand which Amazon Web Services (AWS) offerings are used in data science, and be able to discuss how you would use them in the role.

- Be prepared to discuss your work experience. Be prepared to talk through data science projects you’ve done in the past and explain the decisions you made and the results you achieved.

- Highlight your communication skills. Demonstrate that you can effectively explain technical concepts to non-technical stakeholders.

- Understand your motivations. Explain why data science is the right fit for you and why you are excited to join Amazon.

- Prepare a portfolio. If you have any data science projects you’ve done, be prepared to discuss them.


Conclusion

Overall, Amazon’s data scientist interview process is an intense but rewarding experience. By taking the time to prepare for the questions and answers, you will be able to demonstrate your knowledge and abilities in a way that makes you stand out from other potential candidates. With some dedication and hard work, you may find yourself in a highly coveted job as an Amazon data scientist.