This blog post takes an in-depth look at interview questions and answers for the Data Engineer role at Amazon. Data engineering is an important function in any organization, and candidates need to be able to answer questions about the field with confidence. Most of the questions here assess a candidate's technical knowledge and experience, while some evaluate soft skills and problem-solving ability.

This blog post is designed to provide potential candidates with the proper knowledge needed to excel in an Amazon Data Engineer Interview. Data engineering is a highly technical field, and understanding the proper terminology and concepts is essential to succeeding in the role. Additionally, the blog post covers the topics of data structures, algorithms, databases, and coding, which are all key skills needed to be successful in the role.

The blog post also provides an extensive list of the most commonly asked Amazon Data Engineer Interview Questions & Answers. These questions cover topics such as data warehousing, ETL, Big Data, machine learning, cloud computing, and data analysis. Understanding each of these topics is essential to succeeding in the role, and this blog post provides the necessary preparation for the interview.

Finally, the blog post includes advice from professionals who have worked in the Amazon Data Engineer role. They explain how to prepare for the interview, what to expect during it, and how to succeed in the role, with the aim of giving candidates the best chance of obtaining the position.

In short, this blog post provides an in-depth look at Amazon Data Engineer interview questions and answers. It covers data structures, algorithms, databases, coding, and other important topics. It also provides a list of the most commonly asked questions and answers, as well as advice from experienced professionals.

Overview of the Amazon Data Engineer Interview Process

The Amazon data engineer interview process is a highly competitive process that requires a strong knowledge base and a clear understanding of the technical and qualitative skills necessary to excel in the role. The first stage of the process is a technical screening, during which applicants are assessed on their technical skills and abilities. This involves a coding test, which assesses applicants’ ability to write code and solve problems, as well as a technical interview, which tests applicants’ understanding of database structures, data manipulation, and other technical concepts.

The next stage is the behavioral interview. This entails a series of questions designed to test the applicant’s ability to work in a team, solve complex problems, and handle stress in the workplace. The interviewer will also assess the applicant’s communication skills, problem-solving abilities, and analytical skills.

The last stage of the Amazon data engineer interview process is the onsite interview. The onsite interview is often the most intense stage of the process, as the interviewers assess the applicant’s technical and interpersonal skills in real time. It consists of multiple rounds, which may include coding challenges, problem solving, whiteboarding, and technical system design.

Overall, the Amazon data engineer interview process is designed to assess a candidate’s technical and interpersonal skills, as well as their ability to work in a team. To be successful in the process, applicants must demonstrate a strong knowledge base, excellent problem-solving skills, and the ability to remain calm under pressure.

Top 15 Amazon Data Engineer Interview Questions and Answers

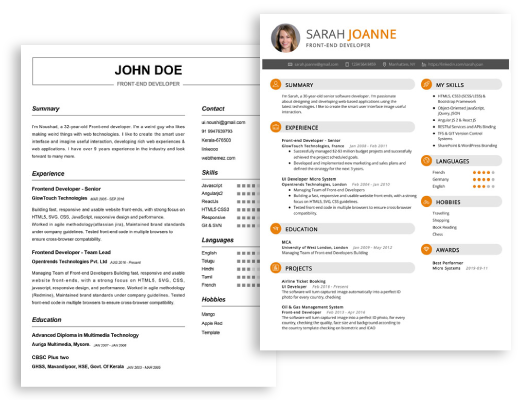

Q1. What experience do you have with data engineering?

Answer: I have over 10 years of experience in data engineering and have worked as a data engineer on multiple projects. My experience includes working with big data technologies such as Hadoop, Hive, and Spark, ETL pipelines, setting up data warehouses and data lakes, creating analytics dashboards, and managing and maintaining data pipelines. I have also developed and maintained data models, ETL processes, and analytical queries. I am experienced in data visualization and machine learning algorithms.

Q2. What is your experience with Amazon Web Services (AWS)?

Answer: I have extensive experience working with Amazon Web Services (AWS). I have worked with various AWS services such as S3, EC2, EMR, Redshift, RDS, and Lambda. I have used these services for various data engineering tasks such as setting up ETL pipelines, creating data warehouses, data lakes, and machine learning models. I have also used these services to develop web applications and data analysis tools.

Q3. What processes do you follow when building data pipelines?

Answer: When building data pipelines, I typically work through the following steps. First, I assess the requirements and goals of the project. Then I create a data model to store the data and develop the ETL processes. I also create and manage the data warehouses and data lakes that hold the data. Finally, I test the pipelines and optimize them for performance.
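
To make the steps in this answer concrete in an interview, it can help to sketch a tiny extract, transform, load flow. The example below is only a minimal illustration using Python's standard library: the `orders` table, the sample CSV text, and all function names are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this might be a file in S3.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,
3,carol,7.25
"""

def extract(text):
    """Read rows from CSV text as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Drop rows with a missing amount and cast fields to proper types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue
        cleaned.append((int(row["order_id"]), row["customer"], float(row["amount"])))
    return cleaned

def load(rows, conn):
    """Create the target table (the data model) and insert the rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(text, conn):
    """Run the three stages in order and return the loaded row count."""
    load(transform(extract(text)), conn)
    # A basic post-load test: count the rows that landed in the target table.
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
loaded = run_pipeline(RAW_CSV, conn)
print(loaded)
```

Keeping each stage as its own function makes it easier to test and optimize the stages independently, which mirrors the final step of the process described above.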

Q4. How do you handle data quality issues?

Answer: To handle data quality issues, I first identify the causes of these issues and then develop strategies to mitigate them. These strategies include data cleansing by using techniques such as data transformation, data normalization, and scrubbing, as well as data verification through data validation tools. I also use data audit tools to identify errors in the data and monitor the quality of the data.
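
A short sketch can show what cleansing and validation might look like in practice. The example below is an assumption-laden illustration, not a specific tool: the field names (`name`, `email`, `age`), the validation rules, and the function names are all hypothetical.

```python
import re

# Deliberately simple pattern for illustration; real email validation is stricter.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def clean_record(record):
    """Scrub and normalize one record: collapse whitespace, lowercase the email."""
    return {
        "name": " ".join(record.get("name", "").split()),
        "email": record.get("email", "").strip().lower(),
        "age": record.get("age"),
    }

def validate_record(record):
    """Return a list of rule violations; an empty list means the record passed."""
    errors = []
    if not record["name"]:
        errors.append("name is empty")
    if not EMAIL_RE.match(record["email"]):
        errors.append("email is malformed")
    if not isinstance(record["age"], int) or not 0 <= record["age"] <= 120:
        errors.append("age is out of range")
    return errors

def audit(records):
    """Split records into valid rows and rejected rows with their errors."""
    valid, rejected = [], []
    for raw in records:
        cleaned = clean_record(raw)
        errors = validate_record(cleaned)
        if errors:
            rejected.append((cleaned, errors))
        else:
            valid.append(cleaned)
    return valid, rejected

records = [
    {"name": "  Ada   Lovelace ", "email": " ADA@Example.com ", "age": 36},
    {"name": "Bob", "email": "not-an-email", "age": 200},
]
valid, rejected = audit(records)
print(valid)
print(rejected)
```

Keeping rejected rows together with their error messages, rather than silently dropping them, gives you something to audit when tracing a quality issue back to its cause.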

Q5. What have you done to ensure data security?

Answer: To ensure data security, I have implemented a variety of measures. I have implemented data encryption protocols, access control lists, and database firewalls. I have also utilized AWS security services such as IAM roles and security groups to restrict access to the data. Additionally, I have implemented data masking and anonymization techniques to protect sensitive information.
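
If the interviewer asks for detail on masking and anonymization, a small sketch like the one below may help. It assumes email addresses are the sensitive field, and it uses a keyed hash (HMAC-SHA256) for pseudonymization, which is one possible technique rather than the only one. The key shown is a placeholder and the function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"example-key-do-not-use"

def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * (len(local) - 1) + "@" + domain

def pseudonymize(value, key=SECRET_KEY):
    """Replace a value with a keyed hash so equal inputs still map to the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

masked = mask_email("alice@example.com")
token = pseudonymize("alice@example.com")
print(masked)
print(token)
```

Masking suits values shown to people, while a keyed hash keeps records joinable across tables without exposing the original value.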

Q6. How do you monitor data pipelines?

Answer: To monitor data pipelines, I use monitoring tools such as Amazon CloudWatch, Datadog, and Splunk. These tools allow me to monitor the performance of the pipelines and identify any errors or issues that may arise. Additionally, I use data quality checks to ensure the accuracy of the data and alert systems to quickly identify any issues that may require immediate attention.
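
The idea of data quality checks plus alerts can be illustrated without any particular monitoring product. The sketch below uses only Python's `logging` module as a stand-in for a tool such as CloudWatch; the thresholds, the `check_run` and `monitored` helpers, and the sample step are all hypothetical.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

def check_run(rows_in, rows_out, duration_s, max_drop_ratio=0.1, max_duration_s=60.0):
    """Return alert messages for one pipeline run; an empty list means healthy."""
    alerts = []
    if rows_in and (rows_in - rows_out) / rows_in > max_drop_ratio:
        alerts.append(f"dropped {rows_in - rows_out} of {rows_in} rows")
    if duration_s > max_duration_s:
        alerts.append(f"run took {duration_s:.1f}s (limit {max_duration_s:.1f}s)")
    return alerts

def monitored(step, rows):
    """Run one step, time it, and log any alerts raised by check_run."""
    start = time.perf_counter()
    result = step(rows)
    duration = time.perf_counter() - start
    alerts = check_run(len(rows), len(result), duration)
    for message in alerts:
        log.warning("ALERT: %s", message)
    log.info("step=%s rows_in=%d rows_out=%d", step.__name__, len(rows), len(result))
    return result, alerts

def drop_negatives(rows):
    """Hypothetical pipeline step that filters out negative values."""
    return [r for r in rows if r >= 0]

result, alerts = monitored(drop_negatives, [5, -1, 7, -3])
```

In a real deployment the alert messages would be sent to a monitoring or paging system instead of a local log.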

Q7. What techniques do you use for data optimization?

Answer: To optimize data, I typically use techniques such as data compression, indexing and partitioning, and query optimization. I use these techniques to improve the performance of the data pipelines and reduce the amount of storage and computing resources required. Additionally, I use data transformation and normalization to improve the quality of the data.
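
Partitioning and compression are easier to discuss with a small example in hand. The sketch below is illustrative only: it partitions hypothetical click events by a `day` field and gzips each partition as JSON lines, using the standard library in place of a columnar format such as Parquet.

```python
import gzip
import json
from collections import defaultdict

def partition_by(records, key):
    """Group records by a partition key so a query can read only what it needs."""
    partitions = defaultdict(list)
    for record in records:
        partitions[record[key]].append(record)
    return dict(partitions)

def compress_partition(records):
    """Serialize records as JSON lines and gzip them."""
    payload = "\n".join(json.dumps(r, sort_keys=True) for r in records)
    return gzip.compress(payload.encode("utf-8"))

def read_partition(blob):
    """Decompress one partition back into records."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines()]

# Hypothetical sample data: 3000 events spread evenly over three days.
events = [
    {"day": f"2024-01-0{1 + i % 3}", "user": i % 10, "action": "click"}
    for i in range(3000)
]
partitions = partition_by(events, "day")
blobs = {day: compress_partition(rows) for day, rows in partitions.items()}

raw_size = len(json.dumps(events).encode("utf-8"))
compressed_size = sum(len(b) for b in blobs.values())

# A query for one day decompresses only that partition.
one_day = read_partition(blobs["2024-01-02"])
print(raw_size, compressed_size, len(one_day))
```

The query for a single day touches one of the three blobs, which is the same reason partition pruning reduces the compute a warehouse query needs.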

Q8. What is your experience with NoSQL databases?

Answer: I have extensive experience working with NoSQL databases such as MongoDB and Apache Cassandra. I have used these databases to store, query, and analyze large amounts of unstructured data. I have also used them to create data warehouses and data lakes and to develop ETL processes.

Q9. How do you ensure data accuracy?

Answer: To ensure data accuracy, I combine data cleansing techniques such as scrubbing, transformation, and normalization with validation rules that check the data against expected formats and ranges. I also use data audit tools to identify any errors or discrepancies in the data. Additionally, I use data quality checks to monitor the accuracy of the data and alert systems to quickly identify any issues that may require immediate attention.

Q10. What experience do you have with streaming data?

Answer: I have extensive experience working with streaming data. I have used various streaming data technologies such as Apache Kafka, Apache Storm, and Apache Spark Streaming to ingest, store, and process streaming data. I have also developed streaming data pipelines to enable real-time data analysis.
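
A common follow-up to this answer is to explain windowed aggregation, the core idea behind much real-time analysis. The sketch below is a plain-Python illustration and does not use Kafka, Storm, or Spark. It assumes events arrive as `(timestamp_seconds, key)` pairs in timestamp order, and the function name is hypothetical.

```python
from collections import Counter

def tumbling_window_counts(events, window_s):
    """Yield (window_start, counts) for each window of an ordered event stream.

    Each event is a (timestamp_seconds, key) pair, and timestamps are assumed
    to be non-decreasing. Late or out-of-order events are not handled here.
    """
    current_start = None
    counts = Counter()
    for ts, key in events:
        start = ts - ts % window_s  # start of the window this event falls in
        if current_start is None:
            current_start = start
        elif start != current_start:
            # The stream has moved past the current window, so emit it.
            yield current_start, dict(counts)
            current_start, counts = start, Counter()
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)

stream = [(0, "view"), (3, "click"), (9, "view"), (10, "view"), (14, "click"), (31, "view")]
windows = list(tumbling_window_counts(stream, 10))
print(windows)
```

Production streaming engines add what this sketch leaves out, such as handling of late events, state checkpointing, and parallelism across partitions.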

Q11. How do you troubleshoot data issues?

Answer: To troubleshoot data issues, I typically use a systematic approach. I first identify the cause of the issue and then develop strategies to mitigate it. I also use data audit tools to identify errors in the data and data quality checks to ensure the accuracy of the data. Additionally, I use debugging and logging tools to help identify the root cause of the issue.

Q12. What is your experience with data governance?

Answer: I have extensive experience working with data governance. I have implemented data governance policies and processes to ensure the accuracy, integrity, and security of the data. I have also developed data catalogs and metadata management systems to ensure data is properly labeled and stored. Additionally, I have used data lifecycle management tools to ensure data is managed in a secure and efficient manner.

Q13. How do you optimize the performance of data pipelines?

Answer: To optimize the performance of data pipelines, I typically use techniques such as data compression, indexing and partitioning, query optimization, and data transformation. I also use various tools such as Amazon CloudWatch and Splunk to monitor the performance of the pipelines. Additionally, I use debugging tools to identify and address any errors or issues in the pipelines.

Q14. What experience do you have with machine learning?

Answer: I have extensive experience working with machine learning. I have used various machine learning algorithms such as linear and logistic regression, support vector machines, and random forests to build predictive models. I have also used various machine learning libraries such as TensorFlow, Keras, and scikit-learn to create and deploy these models. Additionally, I have experience using AWS services such as SageMaker for machine learning model development and deployment.

Q15. How do you handle data privacy and security?

Answer: To handle data privacy and security, I typically use a variety of measures. I have implemented data encryption protocols, access control lists, and database firewalls. I have also implemented data masking and anonymization techniques to protect sensitive information. Additionally, I have used AWS security services such as IAM roles and security groups to restrict access to the data.

Tips on Preparing for an Amazon Data Engineer Interview

- Understand the Amazon Web Services (AWS) ecosystem: Familiarize yourself with the different components of the AWS ecosystem and how they work together.

- Understand the data engineer role: Read up on the specific job description to gain a better understanding of the role and its responsibilities.

- Brush up on SQL: Review your SQL fundamentals and practice writing queries.

- Brush up on Python: Python is widely used for data engineering tasks, so it’s important to be comfortable with the language.

- Prepare for whiteboard coding: Practice coding on a whiteboard or with a digital whiteboard.

- Review cloud architecture: Study cloud architectures and make sure you understand the concepts.

- Have examples ready: Prepare a few examples of projects you’ve worked on that demonstrate your knowledge and experience.

- Research the company: Research Amazon’s culture and values to ensure your interview answers align with their company culture.

- Prepare questions: Have a few questions ready that show you’re interested in the position and company.

- Practice: Have a friend or family member role-play the interview with you to practice your answers.

- Get a good night’s sleep: Make sure you’re well-rested for your interview.

- Dress to impress: Wear professional clothing that makes you feel confident and comfortable.

- Arrive early: Leave yourself extra time to get to the interview location and to get settled in the waiting area.

- Pay attention to nonverbal communication: Pay close attention to nonverbal cues from the interviewer to gauge their reactions.

- Follow up: Thank the interviewer for their time and follow up with a thank-you note or email after the interview.
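
The SQL and Python tips above can be practiced together. The sketch below is one possible practice exercise, not a known Amazon question: it uses Python's built-in `sqlite3` module and a hypothetical `orders` table to find each customer's most recent order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL, ordered_at TEXT);
INSERT INTO orders VALUES
  (1, 'alice', 20.0, '2024-01-01'),
  (2, 'alice', 35.0, '2024-02-01'),
  (3, 'bob',   15.0, '2024-01-15'),
  (4, 'bob',   15.0, '2024-01-10');
""")

# Find each customer's latest order by joining back to the per-customer maximum date.
LATEST_ORDER_SQL = """
SELECT o.customer, o.order_id, o.amount
FROM orders AS o
JOIN (
    SELECT customer, MAX(ordered_at) AS latest
    FROM orders
    GROUP BY customer
) AS m ON m.customer = o.customer AND m.latest = o.ordered_at
ORDER BY o.customer
"""
latest_orders = conn.execute(LATEST_ORDER_SQL).fetchall()
print(latest_orders)
```

Try rewriting the query in other ways, for example with a window function, and explain the trade-offs aloud as you would on a whiteboard.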

Conclusion

The Amazon data engineer interview process is an important step when considering a career in data engineering. Take stock of your skills, experience, and qualifications so that you are prepared to answer the questions you will be asked. This blog post gives you insight into the questions and answers that are likely to come up during an Amazon data engineer interview, and a good understanding of the topics discussed here will give you an edge in the process. With the right preparation, you can land your dream job with Amazon as a data engineer.